In the complex world of hardware design verification, success isn’t achieved through a single technique or methodology. Instead, it rests on three fundamental pillars that work together to ensure comprehensive and reliable verification: Stimulus, Checking, and Coverage. Understanding these pillars and their interconnected relationship is crucial for any verification engineer seeking to build robust, thorough verification environments.

Think of these pillars as the legs of a three-legged stool. Remove any one of them, and the entire verification effort becomes unstable and unreliable. Each pillar serves a distinct purpose, yet they complement and reinforce each other to create a verification framework that can tackle even the most complex digital designs.

Pillar 1: Stimulus – Exercising Your Design

The first pillar, Stimulus, is all about driving your design under test (DUT) with meaningful inputs that explore its behavior across as many relevant scenarios as possible. Exhaustively applying every input sequence is rarely feasible for real designs, so the aim is well-chosen stimulus rather than all stimulus. Without proper stimulus, your verification environment is like a detective trying to solve a case without asking the right questions: you might get lucky, but you’re more likely to miss critical information.

The Art of Stimulus Generation

Effective stimulus generation goes far beyond simply applying random values to your design’s inputs. It requires a deep understanding of your design’s intended behavior, its corner cases, and the real-world conditions it will encounter. The goal is to create stimulus that is both comprehensive and intelligent, exercising not just the obvious functionality but also the edge cases where bugs often hide.

Consider a simple FIFO (First-In-First-Out) buffer as an example. Basic stimulus might involve writing some data and then reading it back. However, comprehensive stimulus would include scenarios such as:

- Back-to-back writes until the FIFO is full

- Attempting to write to a full FIFO

- Back-to-back reads until the FIFO is empty

- Attempting to read from an empty FIFO

- Simultaneous read and write operations

- Reset conditions during various FIFO states

- Different data patterns including all zeros, all ones, and alternating patterns

Constrained Random vs. Directed Testing

Modern verification leverages two primary stimulus approaches: constrained random testing and directed testing. Constrained random testing uses intelligent randomization within defined boundaries to explore the design space efficiently. This approach excels at finding unexpected bugs by exercising combinations of inputs that human testers might not consider.

For our FIFO example, constrained random stimulus might randomly vary the timing between reads and writes, the amount of data written in each burst, and the patterns of data, all while respecting the FIFO’s capacity constraints. This approach can uncover subtle timing-related bugs or issues with specific data patterns.
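As a rough illustration of the paragraph above, the sketch below randomizes burst length, idle gap, and data pattern while keeping each burst within the FIFO capacity. The names `fifo_burst_pkg`, `fifo_burst`, `pattern_e`, and the depth of 16 are assumptions made for this example, not part of any particular design.

```systemverilog
// Sketch only: the package, class, field names, and FIFO_DEPTH are assumed for illustration.
package fifo_burst_pkg;
  localparam int FIFO_DEPTH = 16;

  typedef enum {ALL_ZEROS, ALL_ONES, ALTERNATING, RANDOM_DATA} pattern_e;

  class fifo_burst;
    rand int unsigned burst_len;   // number of back-to-back writes
    rand int unsigned gap_cycles;  // idle cycles before the burst starts
    rand pattern_e    pattern;     // which data pattern to send
    rand bit [7:0]    data[];      // one byte per write in the burst

    // Respect the FIFO capacity: never request more writes than it can hold.
    constraint burst_fits  { burst_len inside {[1:FIFO_DEPTH]}; }
    // Weight the gap toward back-to-back traffic while keeping long gaps possible.
    constraint gap_profile { gap_cycles dist {0 := 4, [1:3] :/ 4, [4:20] :/ 2}; }
    constraint data_size   { data.size() == burst_len; }
    // RANDOM_DATA has no entry here, so its bytes stay unconstrained.
    constraint data_pattern {
      foreach (data[i]) {
        (pattern == ALL_ZEROS)   -> (data[i] == 8'h00);
        (pattern == ALL_ONES)    -> (data[i] == 8'hFF);
        (pattern == ALTERNATING) -> (data[i] == ((i % 2 == 1) ? 8'h55 : 8'hAA));
      }
    }
  endclass
endpackage

module fifo_burst_demo;
  import fifo_burst_pkg::*;

  fifo_burst b;

  initial begin
    b = new();
    repeat (5) begin
      if (!b.randomize()) $fatal(1, "randomization failed");
      $display("gap=%0d len=%0d pattern=%s",
               b.gap_cycles, b.burst_len, b.pattern.name());
    end
  end
endmodule
```

The `dist` weights are arbitrary starting values; in practice they would be tuned to the traffic the design is expected to see.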

Directed testing, on the other hand, targets specific functionality or known challenging scenarios. These tests are carefully crafted to verify particular features or to reproduce specific conditions. For the FIFO, directed tests might focus on verifying the exact behavior when transitioning from empty to full states, or ensuring proper flag generation under specific timing conditions.
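A directed test can be as simple as a fixed, ordered list of operations. The sketch below builds one such list: fill the FIFO, attempt one extra write, drain it, and attempt one extra read. The type `fifo_op_t`, the function name, and the depth of 4 are hypothetical, and driving the list into a real DUT is left out.

```systemverilog
// Sketch only: FIFO_DEPTH, fifo_op_t, and the function name are assumed for illustration.
module fifo_directed_sequence;
  localparam int FIFO_DEPTH = 4;

  typedef struct {
    bit       write_enable;
    bit       read_enable;
    bit [7:0] data;
  } fifo_op_t;

  fifo_op_t ops[$];  // ordered list of operations, one per clock cycle

  function automatic void build_fill_overflow_drain_underflow();
    // FIFO_DEPTH writes reach the full state; the final iteration is the
    // write-when-full attempt.
    for (int i = 0; i <= FIFO_DEPTH; i++)
      ops.push_back(fifo_op_t'{write_enable: 1'b1, read_enable: 1'b0,
                               data: 8'(i + 1)});
    // FIFO_DEPTH reads reach the empty state; the final iteration is the
    // read-when-empty attempt.
    for (int i = 0; i <= FIFO_DEPTH; i++)
      ops.push_back(fifo_op_t'{write_enable: 1'b0, read_enable: 1'b1,
                               data: 8'h00});
  endfunction

  initial begin
    build_fill_overflow_drain_underflow();
    foreach (ops[i])
      $display("cycle %0d: wr=%0b rd=%0b data=0x%02h",
               i, ops[i].write_enable, ops[i].read_enable, ops[i].data);
  end
endmodule
```

How the DUT should respond to the extra write and the extra read (ignore it, raise an error flag, and so on) depends on the FIFO's specification, so the matching checks are specific to each design.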

Practical Stimulus Implementation

In SystemVerilog, stimulus generation often involves classes with randomization capabilities. Here’s a simplified example for our FIFO:

    class fifo_stimulus;
      rand bit [7:0] data;          // byte to write
      rand bit       write_enable;
      rand bit       read_enable;
      rand int       delay;         // idle cycles before this operation

      constraint valid_delays {
        delay inside {[0:10]};
      }

      constraint realistic_operations {
        // Prevent simultaneous read and write initially
        !(write_enable && read_enable);
      }
    endclass

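This approach allows the verification environment to generate thousands of different stimulus scenarios automatically, each exploring different aspects of the FIFO’s behavior. Note that the realistic_operations constraint deliberately excludes simultaneous reads and writes, so that scenario is only reached once the constraint is relaxed or a separate test targets it.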
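One possible way to use the class is sketched below. It repeats the class so the example is self-contained, randomizes with the default constraints, then switches `realistic_operations` off with `constraint_mode(0)` so that simultaneous operations become reachable. The module name and the loop counts are arbitrary choices for illustration.

```systemverilog
// Sketch only: the class mirrors the example above; the demo module is hypothetical.
class fifo_stimulus;
  rand bit [7:0] data;
  rand bit       write_enable;
  rand bit       read_enable;
  rand int       delay;

  constraint valid_delays         { delay inside {[0:10]}; }
  constraint realistic_operations { !(write_enable && read_enable); }
endclass

module fifo_stimulus_demo;
  fifo_stimulus stim;
  int simultaneous_count;

  initial begin
    stim = new();

    // Phase 1: default constraints, so reads and writes never coincide.
    repeat (100) begin
      if (!stim.randomize()) $fatal(1, "randomization failed");
      if (stim.write_enable && stim.read_enable) simultaneous_count++;
    end
    $display("phase 1 simultaneous ops: %0d", simultaneous_count);

    // Phase 2: disable the restriction so simultaneous operations can occur.
    stim.realistic_operations.constraint_mode(0);
    repeat (100) begin
      if (!stim.randomize()) $fatal(1, "randomization failed");
      if (stim.write_enable && stim.read_enable) simultaneous_count++;
    end
    $display("after phase 2 simultaneous ops: %0d", simultaneous_count);

    // An inline constraint can also force one specific corner case.
    if (!stim.randomize() with { write_enable && read_enable; delay == 0; })
      $fatal(1, "randomization failed");
    $display("forced: wr=%0b rd=%0b delay=%0d",
             stim.write_enable, stim.read_enable, stim.delay);
  end
endmodule
```

The phase 1 count is always zero because the constraint forbids the combination; the phase 2 count depends on the random seed.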

Pillar 2: Checking – Catching the Bugs

The second pillar, Checking, is responsible for determining whether your design behaves correctly under the applied stimulus. This pillar transforms your verification environment from a simple exercise generator into a true bug-hunting machine. Without effective checking, you might run millions of simulation cycles without detecting critical bugs, creating a false sense of security.

Levels of Checking

Checking operates at multiple levels, from low-level protocol verification to high-level functional correctness. Each level serves a specific purpose and catches different types of bugs.

Protocol-level checking ensures that your design adheres to interface specifications and timing requirements. For our FIFO example, this might include verifying that the full and empty flags are asserted at the correct times, or ensuring that read operations don’t occur when the FIFO is empty.

Functional checking verifies that the design implements the intended functionality correctly. This involves checking that data written to the FIFO is read back in the correct order (first-in, first-out behavior), that the FIFO capacity is respected, and that all operations produce the expected results.

Data integrity checking ensures that information isn’t corrupted as it passes through the design. For the FIFO, this means verifying that the data read from the FIFO exactly matches what was previously written.

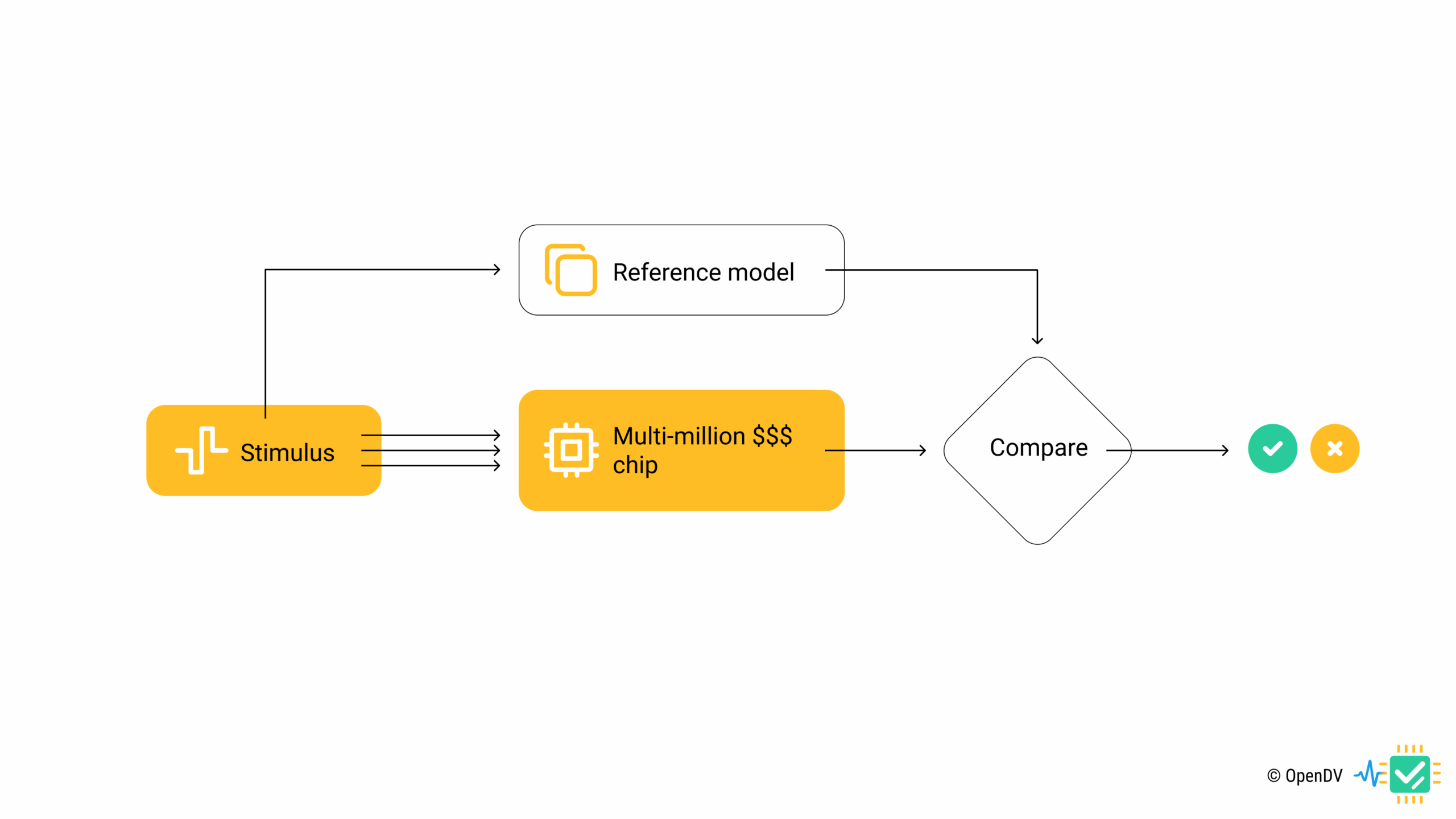

Self-Checking Testbenches

Modern verification environments implement self-checking capabilities that automatically detect and report errors without human intervention. This automation is crucial for running the extensive test suites required for thorough verification.

A typical self-checking implementation for our FIFO might include:

- A reference model that predicts expected behavior

- Comparisons between actual and expected outputs

- Automatic flagging of mismatches

- Detailed error reporting for debugging
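The pieces listed above can be sketched as a small scoreboard in which a SystemVerilog queue acts as the reference model. The class name `fifo_scoreboard` and its methods `on_write` and `on_read` are assumptions for this example; a real environment would call them from monitors observing the DUT interface.

```systemverilog
// Sketch only: fifo_scoreboard and its method names are assumed for illustration.
class fifo_scoreboard;
  logic [7:0] expected[$];  // reference model: a queue keeps first-in, first-out order
  int         error_count;

  // Call when the DUT accepts a write.
  function void on_write(logic [7:0] data);
    expected.push_back(data);
  endfunction

  // Call when the DUT returns read data; compares it with the oldest expected entry.
  function void on_read(logic [7:0] actual);
    logic [7:0] want;
    if (expected.size() == 0) begin
      error_count++;
      $error("read returned 0x%02h but the reference model is empty", actual);
      return;
    end
    want = expected.pop_front();
    if (actual !== want) begin
      error_count++;
      $error("data mismatch: expected 0x%02h, got 0x%02h", want, actual);
    end
  endfunction
endclass

module fifo_scoreboard_demo;
  fifo_scoreboard sb;

  initial begin
    sb = new();
    sb.on_write(8'hA1);
    sb.on_write(8'hB2);
    sb.on_read(8'hA1);  // matches the oldest entry
    sb.on_read(8'hFF);  // deliberate mismatch to show the error path
    $display("errors: %0d", sb.error_count);
  end
endmodule
```

The demo ends with an error count of 1, caused by the deliberate mismatch on the second read.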

Assertion-Based Verification

SystemVerilog Assertions (SVA) provide a powerful mechanism for continuous checking throughout simulation. Unlike procedural checks that execute at specific points, assertions monitor design behavior continuously and can catch violations as soon as they occur.

For our FIFO example, assertions might include:

    // FIFO should never be both full and empty
    assert property (@(posedge clk) !(full && empty));

    // One cycle after reset is sampled high, the FIFO should be empty
    assert property (@(posedge clk) reset |=> empty);

    // Data integrity check. expected_data is assumed to be supplied by a
    // reference model; whether $past is needed depends on the read latency.
    assert property (@(posedge clk) (read_enable && !empty) |->
        (read_data == $past(expected_data)));

These assertions provide continuous monitoring and catch violations immediately, making debugging much more efficient.
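Assertions like these are often grouped in a separate checker module so they can be reused across testbenches. The sketch below is one way to do that, adding `disable iff (reset)` and failure messages. The module name, the port names, and the assumption that an internal `fill_count` signal is visible to the checker are all hypothetical.

```systemverilog
// Sketch only: module name, ports, and DEPTH are assumed; adapt to the real FIFO.
module fifo_flag_checker #(parameter int DEPTH = 16) (
  input logic                       clk,
  input logic                       reset,
  input logic                       full,
  input logic                       empty,
  input logic [$clog2(DEPTH+1)-1:0] fill_count
);
  // The full flag must be set exactly when the FIFO holds DEPTH entries.
  a_full_matches_count: assert property (
    @(posedge clk) disable iff (reset) full == (fill_count == DEPTH)
  ) else $error("full=%0b but fill_count=%0d", full, fill_count);

  // The empty flag must be set exactly when the FIFO holds no entries.
  a_empty_matches_count: assert property (
    @(posedge clk) disable iff (reset) empty == (fill_count == 0)
  ) else $error("empty=%0b but fill_count=%0d", empty, fill_count);

  // The fill count must never exceed the capacity.
  a_count_in_range: assert property (
    @(posedge clk) disable iff (reset) fill_count <= DEPTH
  ) else $error("fill_count=%0d exceeds DEPTH=%0d", fill_count, DEPTH);
endmodule
```

A checker of this kind can be instantiated in the testbench or attached to the design with a `bind` statement, which keeps verification code out of the RTL source.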

Pillar 3: Coverage – Measuring Progress

The third pillar, Coverage, answers the critical question: “How do we know when we’re done?” Coverage provides quantitative metrics that measure the thoroughness of your verification effort and guide you toward areas that need additional attention.

Types of Coverage

Coverage comes in several forms, each providing different insights into verification completeness.

Code coverage measures which parts of your design’s code have been exercised during simulation. This includes line coverage (which lines of code were executed), branch coverage (which decision paths were taken), and expression coverage (which combinations of sub-expression values were seen). While high code coverage doesn’t guarantee a bug-free design, low code coverage definitely indicates insufficient testing.

Functional coverage measures whether your verification environment has exercised the intended functionality of your design. Unlike code coverage, which is automatically derived from the design, functional coverage must be explicitly defined based on your design’s specification and requirements.

For our FIFO example, functional coverage might track:

- All possible FIFO fill levels (empty, partially full, completely full)

- All types of operations (read, write, simultaneous read/write)

- All transition scenarios (empty-to-full, full-to-empty, etc.)

- Different data patterns and their combinations

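Assertion coverage measures which assertions were actually triggered and evaluated during simulation, for example how often an assertion’s precondition matched and the check then completed without failing. Simulation cannot prove an assertion; it can only show that the assertion was exercised and did not fail for the stimulus applied. This helps ensure that your assertion-based checks are actually being exercised, not just sitting dormant in your code.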
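A common way to make this visible is to pair an assertion with a `cover property` on the same scenario. In the sketch below, the assertion would pass vacuously forever if reset were never asserted, while the cover directive records whether the reset-then-empty sequence was actually observed. The module, the signal names, and the timing of the stimulus are made up for the example.

```systemverilog
// Sketch only: a toy testbench with assumed signal names and no real FIFO attached.
module assertion_coverage_demo;
  logic clk   = 0;
  logic reset = 0;
  logic empty = 1;  // stands in for the FIFO's empty flag

  always #5 clk = ~clk;

  // The check: one cycle after reset is sampled high, empty must be high.
  a_reset_empties: assert property (@(posedge clk) reset |=> empty);

  // The coverage: records that the scenario above really occurred.
  c_reset_empties: cover property (@(posedge clk) reset ##1 empty)
    $display("%0t: reset-then-empty scenario covered", $time);

  initial begin
    repeat (2) @(posedge clk);
    reset <= 1;            // assert reset for one clock cycle
    @(posedge clk);
    reset <= 0;
    repeat (3) @(posedge clk);
    $finish;
  end
endmodule
```

If the cover directive never hits in a regression, the matching assertion has not been meaningfully exercised, however many cycles it has passed.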

Implementing Coverage in Practice

SystemVerilog provides robust coverage constructs that integrate seamlessly into your verification environment. Here’s an example of functional coverage for our FIFO:

    covergroup fifo_coverage @(posedge clk);
      fill_level: coverpoint fill_count {
        bins empty   = {0};
        bins partial = {[1:FIFO_DEPTH-1]};
        bins full    = {FIFO_DEPTH};
      }

      operations: coverpoint {write_enable, read_enable} {
        bins write_only   = {2'b10};
        bins read_only    = {2'b01};
        bins idle         = {2'b00};
        bins simultaneous = {2'b11};
      }

      // Cross coverage for comprehensive scenarios
      fill_ops: cross fill_level, operations;
    endgroup

This coverage model reports which combinations of FIFO fill levels and operations have been exercised during verification, so any combination that was never hit shows up as a coverage hole.
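A covergroup only collects data once it has been instantiated. The sketch below embeds the same covergroup in a toy testbench, drives a simple fill-and-drain sequence against a behavioral fill counter (a stand-in for a real FIFO), and prints the resulting coverage. The module name, the depth of 4, and the counter logic are assumptions for illustration.

```systemverilog
// Sketch only: a behavioral fill counter replaces the real FIFO; names are assumed.
module fifo_coverage_demo;
  localparam int FIFO_DEPTH = 4;

  logic        clk = 0;
  logic        write_enable = 0;
  logic        read_enable  = 0;
  int unsigned fill_count   = 0;

  always #5 clk = ~clk;

  covergroup fifo_coverage @(posedge clk);
    option.per_instance = 1;
    fill_level: coverpoint fill_count {
      bins empty   = {0};
      bins partial = {[1:FIFO_DEPTH-1]};
      bins full    = {FIFO_DEPTH};
    }
    operations: coverpoint {write_enable, read_enable} {
      bins write_only   = {2'b10};
      bins read_only    = {2'b01};
      bins idle         = {2'b00};
      bins simultaneous = {2'b11};
    }
    fill_ops: cross fill_level, operations;
  endgroup

  fifo_coverage cov = new();  // without an instance, nothing is sampled

  // Behavioral stand-in for the FIFO's occupancy counter.
  always @(posedge clk) begin
    if (write_enable && !read_enable && fill_count < FIFO_DEPTH)
      fill_count <= fill_count + 1;
    else if (read_enable && !write_enable && fill_count > 0)
      fill_count <= fill_count - 1;
  end

  initial begin
    write_enable <= 1;
    repeat (FIFO_DEPTH + 1) @(posedge clk);  // last edge is a write attempt while full
    write_enable <= 0;
    read_enable  <= 1;
    repeat (FIFO_DEPTH + 1) @(posedge clk);  // last edge is a read attempt while empty
    read_enable  <= 0;
    @(posedge clk);
    $display("covergroup coverage: %0.1f%%", cov.get_inst_coverage());
    $finish;
  end
endmodule
```

Because this sequence never drives both enables together, every cross bin involving `simultaneous` stays uncovered, which is exactly the kind of hole the coverage report is meant to expose.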

Coverage-Driven Verification

Coverage metrics don’t just measure progress; they actively guide verification efforts. Coverage-driven verification feeds coverage results back into stimulus generation, whether through engineers adjusting constraints and tests by hand or through automation, so that effort is focused on unexplored areas of the design space.

When coverage analysis reveals gaps, the verification team can either enhance existing tests or create new directed tests to hit the uncovered scenarios. This iterative process continues until coverage goals are met, ensuring thorough verification across all intended functionality.
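The feedback loop can also be partly automated. The sketch below is a deliberately simple illustration, not a recommended algorithm: it generates operations in batches, checks the covergroup after each batch, and raises the weight of non-idle operations while bins are still missing. The names `fifo_op`, `op_e`, `active_weight`, and the batch sizes are all invented for the example.

```systemverilog
// Sketch only: a toy feedback loop with assumed names, weights, and batch sizes.
module coverage_feedback_demo;
  typedef enum {IDLE, WRITE_ONLY, READ_ONLY, SIMULTANEOUS} op_e;

  class fifo_op;
    rand op_e    op;
    int unsigned active_weight = 1;  // not rand: tuned by the test between batches
    constraint op_mix {
      op dist {IDLE         := 100,
               WRITE_ONLY   := active_weight,
               READ_ONLY    := active_weight,
               SIMULTANEOUS := active_weight};
    }
  endclass

  covergroup op_cg with function sample(op_e op);
    option.per_instance = 1;
    ops: coverpoint op;  // one automatic bin per enum value
  endgroup

  fifo_op txn;
  op_cg   cov;

  initial begin
    txn = new();
    cov = new();
    for (int batch = 0; batch < 50 && cov.get_inst_coverage() < 100.0; batch++) begin
      repeat (20) begin
        if (!txn.randomize()) $fatal(1, "randomization failed");
        cov.sample(txn.op);
      end
      // Feedback step: while bins are missing, make non-idle operations more likely.
      if (cov.get_inst_coverage() < 100.0 && txn.active_weight < 100)
        txn.active_weight *= 2;
    end
    $display("coverage %0.1f%% with active_weight=%0d",
             cov.get_inst_coverage(), txn.active_weight);
  end
endmodule
```

A production flow would normally identify which specific bins are missing from the simulator's coverage report and adjust the matching constraints, instead of scaling a single weight.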

The Synergy of the Three Pillars

The true power of simulation-based verification emerges when all three pillars work together synergistically. Stimulus drives the design, checking catches bugs, and coverage measures progress, but their interaction creates capabilities greater than the sum of their parts.

Stimulus informs checking: Understanding what stimulus is being applied helps design appropriate checks. If your stimulus includes corner cases like simultaneous operations, your checking mechanisms must be sophisticated enough to handle these scenarios.

Checking validates stimulus: Effective checking can reveal when stimulus isn’t actually exercising the intended scenarios. If checks consistently pass without any interesting failures, it might indicate that the stimulus isn’t challenging the design sufficiently.

Coverage guides stimulus: Coverage analysis reveals which scenarios haven’t been adequately tested, guiding the development of new stimulus to fill these gaps.

Coverage validates checking: If coverage shows that certain scenarios have been exercised but no checks were ever evaluated for them, it might indicate missing or inadequate checking mechanisms.

Advanced Considerations

As designs become more complex, the three pillars must evolve to handle new challenges. Modern verification environments may incorporate machine learning techniques to optimize stimulus generation, formal verification methods to complement simulation-based checking, and sophisticated coverage analysis to handle the explosion of possible design states.

Constrained random stimulus becomes more intelligent, using coverage feedback to bias random generation toward unexplored areas. Assertion-based verification expands beyond simple property checking to include complex temporal properties and cross-module interactions. Coverage analysis incorporates not just functional coverage but also performance coverage, power coverage, and system-level coverage metrics.

Practical Implementation Strategy

Building a verification environment based on these three pillars requires a systematic approach. Start by thoroughly understanding your design specification and identifying all the scenarios that need verification. Develop your stimulus generation to cover these scenarios comprehensively, implement checking mechanisms that can detect violations reliably, and define coverage metrics that accurately measure verification progress.

Begin with simple implementations of each pillar and gradually increase sophistication. Early stimulus might be largely directed, with constrained random elements added as the environment matures. Initial checking might focus on basic functional correctness, expanding to include protocol compliance and performance verification. Coverage typically starts with basic functional coverage and grows to include complex cross-coverage and system-level metrics.

Conclusion

The three pillars of simulation-based verification—Stimulus, Checking, and Coverage—provide a proven framework for tackling even the most complex verification challenges. By understanding each pillar’s role and their synergistic relationship, verification engineers can build environments that are not only thorough and reliable but also efficient and maintainable.

Success in verification isn’t about implementing the most sophisticated tools or running the most tests—it’s about thoughtfully applying these three fundamental principles to create a verification strategy that provides confidence in your design’s correctness. Whether you’re verifying a simple FIFO or a complex multi-core processor, these pillars will guide you toward verification success.

Remember that verification is both an art and a science. While the three pillars provide the scientific framework, the art lies in knowing how to apply them effectively for your specific design and requirements. Master these pillars, understand their interactions, and you’ll have the foundation needed to verify any digital design with confidence.