If you’re new to hardware verification, you’ve probably heard it’s one of the most challenging parts of chip design. You need to create tests that thoroughly exercise your hardware, catch bugs when they occur, and build confidence that your design works correctly. Traditionally, this has required years of experience and deep expertise. Large Language Models (LLMs) like ChatGPT and Claude are starting to change that, making verification more accessible and efficient.

What Makes Verification So Hard?

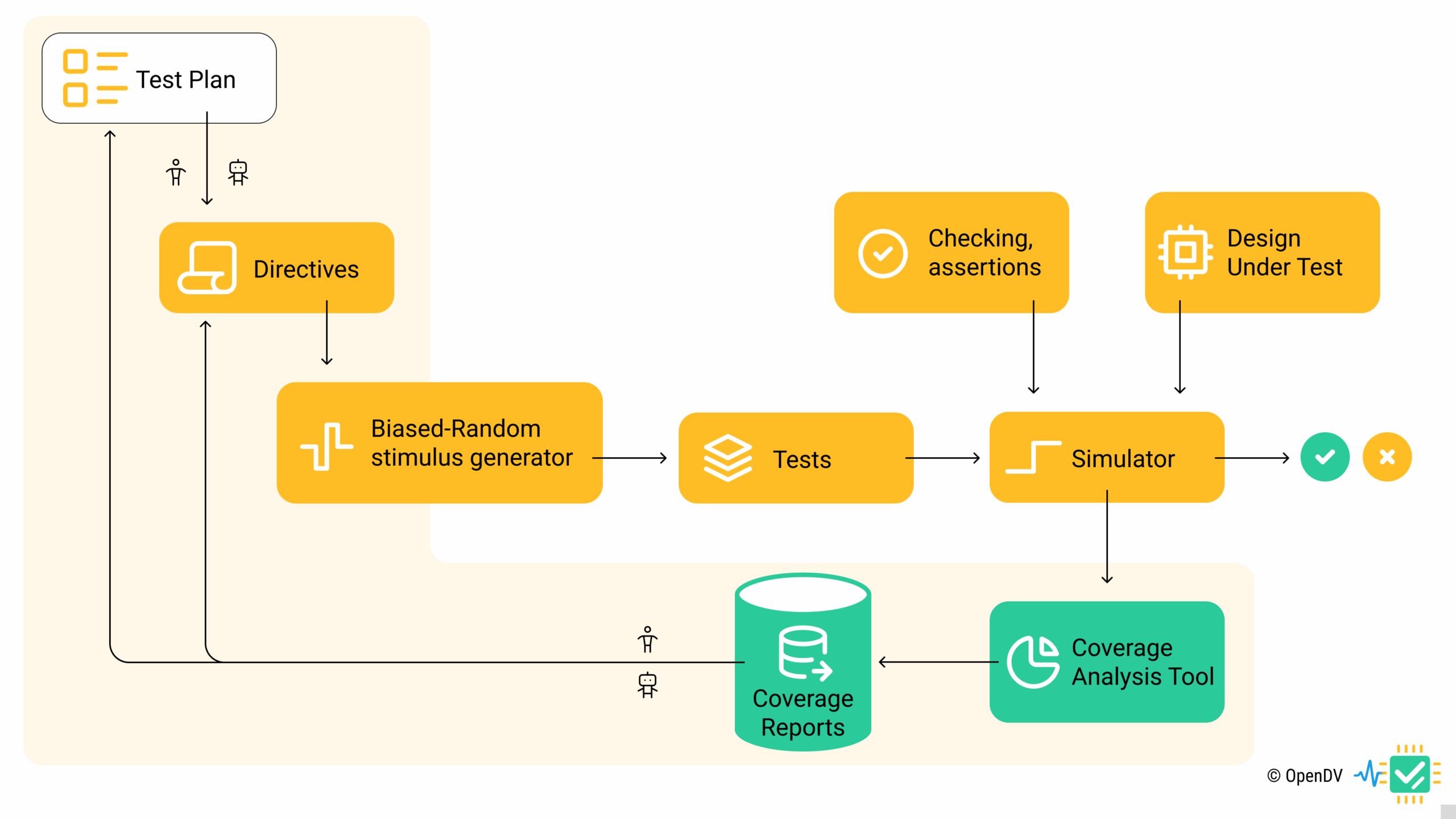

Before diving into how LLMs help, let’s understand the challenge. Hardware verification involves three main tasks:

- Creating tests (stimulus) that exercise your design

- Checking that the design behaves correctly

- Measuring coverage to know when you’re done

Each of these requires specialized knowledge, and missing any piece can leave critical bugs undiscovered. This is where LLMs become game-changers.

1. Automatic Test Generation – Your AI Test Writer

The most immediate impact of LLMs is in generating test cases. Instead of spending days writing individual tests by hand, you can describe what you want to test and get a first draft of a test suite in seconds.

Real Example: Testing a FIFO Buffer

Let’s say you need to verify a simple FIFO (First-In-First-Out) buffer. Here’s how an LLM helps:

Your request: “Generate SystemVerilog tests for a 16-deep FIFO that handles 8-bit data. Include normal operation, overflow, underflow, and reset scenarios.”

What you get: Complete test cases including:

- Filling the FIFO completely and checking the full flag

- Reading from an empty FIFO to test underflow protection

- Writing to a full FIFO to test overflow handling

- Reset during various FIFO states

- Back-to-back read/write operations

The LLM doesn’t stop at basic tests. It often suggests edge cases you might miss, such as simultaneous read and write operations or specific data patterns that could reveal subtle bugs.
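To judge whether generated FIFO tests check the right things, it helps to have a golden reference model to compare against. The Python sketch below models the 16-deep, 8-bit FIFO from the request and replays the listed scenarios. The overflow and underflow behavior is an assumption. A write to a full FIFO is dropped and flagged. A read from an empty FIFO returns nothing and is flagged. A simultaneous read and write on a full FIFO succeeds. There is no fall-through on an empty FIFO. `FifoModel` and `run_directed_tests` are hypothetical names, and Python is used only for brevity.

```python
from collections import deque


class FifoModel:
    """Golden model of a FIFO (defaults: 16 entries of 8 bits)."""

    def __init__(self, depth: int = 16, width: int = 8):
        self.depth = depth
        self.mask = (1 << width) - 1
        self.reset()

    def reset(self):
        self.data = deque()
        self.overflow = False   # set for one cycle when a write is dropped
        self.underflow = False  # set for one cycle when a read finds it empty

    @property
    def full(self) -> bool:
        return len(self.data) == self.depth

    @property
    def empty(self) -> bool:
        return not self.data

    def step(self, wr_en=False, wr_data=0, rd_en=False):
        """Model one clock edge. Returns the value read, or None."""
        self.overflow = self.underflow = False
        was_full, was_empty = self.full, self.empty
        rd_data = None
        if rd_en:
            if was_empty:
                self.underflow = True          # assumed: no data, flag raised
            else:
                rd_data = self.data.popleft()
        if wr_en:
            if was_full and not rd_en:
                self.overflow = True           # full and nothing leaving: drop it
            else:
                self.data.append(wr_data & self.mask)
        return rd_data


def run_directed_tests():
    fifo = FifoModel()
    for i in range(16):                                   # fill completely
        fifo.step(wr_en=True, wr_data=i)
    assert fifo.full
    fifo.step(wr_en=True, wr_data=0xAA)                   # write while full
    assert fifo.overflow and len(fifo.data) == 16
    assert fifo.step(wr_en=True, wr_data=0x55, rd_en=True) == 0  # read+write while full
    assert fifo.full and not fifo.overflow
    drained = [fifo.step(rd_en=True) for _ in range(16)]  # FIFO order preserved
    assert drained == list(range(1, 16)) + [0x55]
    assert fifo.step(rd_en=True) is None and fifo.underflow  # read while empty
    fifo.step(wr_en=True, wr_data=0x1FF)                  # truncated to 8 bits
    assert fifo.data[0] == 0xFF
    fifo.reset()                                          # reset while non-empty
    assert fifo.empty and not fifo.underflow
    return "all directed FIFO checks passed"
```

In a real testbench, a scoreboard would call a model like this on every clock edge and compare its outputs with the DUT’s.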

Beyond Basic Tests

LLMs are also good at drafting more sophisticated test generators. Ask for a “constrained random test class for memory transactions,” and you’ll get a starting point for code that can generate thousands of realistic memory access patterns automatically. Writing this well from scratch can take an experienced engineer several days.
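In SystemVerilog, such a class would declare `rand` fields with `constraint` blocks and rely on the simulator’s solver through `randomize()`. The Python sketch below imitates that with a seeded random generator and rejection sampling. Everything specific here is an illustrative assumption: the memory window, the roughly 30% write ratio, the beat sizes, the rule that a burst must not cross a 4 KB boundary (similar to AXI’s restriction), and the names `MemTxn`, `constraints_ok`, and `generate`.

```python
import random
from dataclasses import dataclass, field

# Illustrative constraints (assumptions, not from any specific design).
BASE, LIMIT = 0x1000_0000, 0x1001_0000   # legal address window [BASE, LIMIT)
PAGE = 4096                              # a burst must not cross a 4 KB boundary
SIZES = (1, 2, 4)                        # bytes per beat
WRITE_RATIO = 0.3                        # roughly 30% writes, 70% reads


@dataclass
class MemTxn:
    is_write: bool
    addr: int
    size: int                            # bytes per beat
    length: int                          # number of beats (1..16)
    data: list = field(default_factory=list)

    def last_byte(self) -> int:
        return self.addr + self.size * self.length - 1


def constraints_ok(t: MemTxn) -> bool:
    return (
        t.addr % t.size == 0                          # aligned to the beat size
        and BASE <= t.addr and t.last_byte() < LIMIT  # stays inside the window
        and t.addr // PAGE == t.last_byte() // PAGE   # no 4 KB crossing
    )


def random_txn(rng: random.Random) -> MemTxn:
    while True:  # rejection sampling stands in for the SystemVerilog solver
        size = rng.choice(SIZES)
        t = MemTxn(
            is_write=rng.random() < WRITE_RATIO,
            addr=rng.randrange(BASE, LIMIT, size),  # step keeps it aligned
            size=size,
            length=rng.randint(1, 16),
        )
        if constraints_ok(t):
            if t.is_write:
                t.data = [rng.randrange(1 << (8 * size)) for _ in range(t.length)]
            return t


def generate(n: int, seed: int = 1) -> list:
    rng = random.Random(seed)  # a fixed seed makes any failing run reproducible
    return [random_txn(rng) for _ in range(n)]
```

Fixing the seed matters in practice. A failure found with one seed can be replayed exactly, which is the same reason simulators let you choose the random seed for a run.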

2. Instant Code Generation – Your Verification Assistant

Starting a new verification project usually means writing lots of boilerplate code: drivers, monitors, scoreboards, and test environments. LLMs can draft much of this verification framework in minutes.

Example: SPI Interface Testbench

Your request: “Create a complete UVM testbench for an SPI master with configurable clock modes.”

What you get:

- Complete testbench structure with all necessary components

- Driver that can send SPI transactions with proper timing

- Monitor that captures and checks SPI bus activity

- Scoreboard that verifies data integrity

- Configuration classes for different SPI modes

- Ready-to-run test examples

This can save days or weeks of initial setup work, letting you focus on the unique aspects of your design rather than recreating standard verification infrastructure.
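The “configurable clock modes” in the SPI request refer to CPOL, the clock idle level, and CPHA, which selects whether data is sampled on the leading or trailing clock edge. Together they give modes 0 to 3. The Python sketch below captures that mapping in a configuration object, models the bit order a driver and monitor would use, and adds a minimal in-order scoreboard. It is an idealized model that ignores timing. `SpiConfig`, `serialize`, `deserialize`, and `Scoreboard` are hypothetical names.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class SpiConfig:
    cpol: int              # SCLK idle level: 0 or 1
    cpha: int              # 0: sample on leading edge, 1: sample on trailing edge
    msb_first: bool = True

    @property
    def mode(self) -> int:
        return (self.cpol << 1) | self.cpha  # SPI mode 0..3

    @property
    def sample_edge(self) -> str:
        leading = "rising" if self.cpol == 0 else "falling"
        trailing = "falling" if self.cpol == 0 else "rising"
        return leading if self.cpha == 0 else trailing


def serialize(byte: int, cfg: SpiConfig) -> list:
    """Bits the driver puts on MOSI, in transmission order."""
    order = range(7, -1, -1) if cfg.msb_first else range(8)
    return [(byte >> i) & 1 for i in order]


def deserialize(bits: list, cfg: SpiConfig) -> int:
    """Byte the monitor reassembles from the bits it sampled."""
    value = 0
    for pos, bit in enumerate(bits):
        value |= bit << (7 - pos if cfg.msb_first else pos)
    return value


class Scoreboard:
    """In-order scoreboard: each observed byte must match the oldest expected one."""

    def __init__(self):
        self.expected = deque()
        self.errors = []

    def write_expected(self, byte: int):
        self.expected.append(byte)

    def write_actual(self, byte: int):
        if not self.expected:
            self.errors.append(f"unexpected byte 0x{byte:02x}")
            return
        exp = self.expected.popleft()
        if exp != byte:
            self.errors.append(f"expected 0x{exp:02x}, got 0x{byte:02x}")


# Idealized loopback across all four modes (no timing modeled).
for mode in range(4):
    cfg = SpiConfig(cpol=mode >> 1, cpha=mode & 1)
    sb = Scoreboard()
    for b in (0x00, 0xA5, 0xFF):
        sb.write_expected(b)
        sb.write_actual(deserialize(serialize(b, cfg), cfg))
    print(cfg.mode, cfg.sample_edge, sb.errors)
# Modes 0 and 3 sample on the rising edge, modes 1 and 2 on the falling edge.
```

A bit-order mismatch between driver and monitor shows up immediately in the scoreboard. That is the kind of configuration bug worth checking for in generated code.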

3. Smart Bug Hunting – Your Debug Partner

When tests fail, finding the root cause can be like searching for a needle in a haystack. LLMs can speed up this detective work by analyzing the simulation logs, error messages, and waveform excerpts you share with them.

Example: Cache Controller Debug

Your situation: Your cache controller test fails with assertion violations and corrupted data.

Your request: “My cache test failed with coherency assertions firing. Here’s the simulation log and error messages…”

What the LLM provides:

- Analysis of error patterns and timing relationships

- Identification of potential root causes (e.g., “The errors occur during specific memory access patterns”)

- Specific debug recommendations (“Check the coherency protocol state machine during back-to-back cache misses”)

- Suggested additional tests to isolate the issue

An LLM can scan thousands of lines of log output in seconds and point out patterns that might take a person hours to find. Treat its conclusions as hypotheses to confirm in the waveforms and source code.
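Very long logs may not fit in a chat window, and it is worth cross-checking an LLM’s reading of a log anyway. A few lines of Python can condense a log into one row per failing check, which you can paste into the conversation or compare against the LLM’s analysis. The log line format below is hypothetical and only loosely modeled on UVM report messages, so adjust the regular expression to your simulator’s actual output. Sorting by first occurrence follows a common heuristic: the earliest failure is often closest to the root cause.

```python
import re
from collections import defaultdict

# Hypothetical line shape, loosely modeled on UVM report messages, e.g.
#   UVM_ERROR cache_sb.sv(88) @ 1250: uvm_test_top.env.sb [COHERENCY] two owners
# The file(line) field is optional; INFO and WARNING lines are ignored.
LINE_RE = re.compile(
    r"^(?P<sev>UVM_ERROR|UVM_FATAL)\s+(?:\S+\s+)?@\s*(?P<time>\d+):\s*"
    r"(?P<ctx>\S+)\s+\[(?P<id>[^\]]+)\]\s*(?P<msg>.*)$"
)


def summarize(lines):
    """Group error/fatal reports by ID: count, first and last time, first message."""
    groups = defaultdict(lambda: {"count": 0, "first": None, "last": None, "example": ""})
    for line in lines:
        m = LINE_RE.match(line.strip())
        if not m:
            continue
        t = int(m["time"])
        g = groups[m["id"]]
        g["count"] += 1
        if g["first"] is None or t < g["first"]:
            g["first"], g["example"] = t, m["msg"]
        g["last"] = t if g["last"] is None else max(g["last"], t)
    # Earliest-failing check first: a heuristic for where to start debugging.
    return sorted(groups.items(), key=lambda item: item[1]["first"])


def report(lines):
    for check_id, g in summarize(lines):
        print(f"[{check_id}] x{g['count']}  first @ {g['first']}  "
              f"last @ {g['last']}  e.g. {g['example']}")
```

For example, `report(open("sim.log"))` prints the summary, where `sim.log` stands in for your simulator’s log file.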

4. Coverage Analysis Made Simple

Coverage tells you how thoroughly you’ve tested your design, but interpreting coverage reports requires expertise. LLMs make this accessible to beginners.

Example: Processor Verification

Your situation: You have 95% functional coverage but are still finding bugs.

Your request: “Help me analyze my processor coverage database. I’m missing something important.”

What the LLM discovers:

- Individual features are well-covered, but certain combinations haven’t been tested

- Interrupt handling during cache operations is missing

- Specific instruction sequences that could cause pipeline hazards haven’t been exercised

- Recommendations for additional coverage points and test scenarios

This kind of analysis usually comes from senior verification engineers, but an LLM can give anyone a useful first pass.
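The first finding above, where individual features are well covered but certain combinations are not, is exactly what cross coverage measures. In SystemVerilog you would express it with a `cross` inside a `covergroup`. The Python sketch below computes the same idea by hand for two hypothetical coverpoints: the cache controller state and whether an interrupt is pending. It shows how 100% point coverage can coexist with holes in the cross.

```python
from itertools import product

# Hypothetical coverpoints sampled together each cycle.
CACHE_STATES = ("idle", "miss", "refill")
IRQ_PENDING = (False, True)


def analyze(samples):
    """samples: iterable of (cache_state, irq_pending) tuples.

    Returns (cache point coverage, irq point coverage, cross coverage, holes)."""
    seen = set(samples)
    cache_cov = len({c for c, _ in seen} & set(CACHE_STATES)) / len(CACHE_STATES)
    irq_cov = len({i for _, i in seen} & set(IRQ_PENDING)) / len(IRQ_PENDING)
    cross_bins = set(product(CACHE_STATES, IRQ_PENDING))
    holes = sorted(cross_bins - seen)
    cross_cov = 1 - len(holes) / len(cross_bins)
    return cache_cov, irq_cov, cross_cov, holes


# Interrupts were only ever raised while the cache was idle.
observed = [("idle", False), ("idle", True), ("miss", False), ("refill", False)]
cache_cov, irq_cov, cross_cov, holes = analyze(observed)
print(f"cache {cache_cov:.0%}, irq {irq_cov:.0%}, cross {cross_cov:.0%}")
# cache 100%, irq 100%, cross 67%
print("untested combinations:", holes)
```

The two holes, an interrupt during a miss and an interrupt during a refill, correspond to the “interrupt handling during cache operations” gap in the list above.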

5. Learning While You Work

One of the most valuable aspects of using LLMs for verification is that they teach you while helping you work. Unlike traditional tools that just execute commands, LLMs explain what they’re doing and why.

Example: Learning UVM

Your question: “I’m new to UVM. Can you create a simple test sequence and explain how it works?”

What you get:

- Working code with detailed explanations

- Step-by-step breakdown of execution flow

- Common pitfalls and how to avoid them

- Best practice recommendations

- Suggestions for next steps in learning

This makes verification knowledge more accessible, helping teams scale their capabilities quickly.

6. Protocol Expertise On-Demand

Modern designs use numerous communication protocols, such as USB, PCIe, and Ethernet. LLMs can serve as an on-demand reference for these protocols.

Example: USB 3.0 Verification

Your request: “I need to verify a USB 3.0 controller. What are the key test scenarios I should focus on?”

What you get:

- A test plan outline covering the major USB 3.0 features

- Specific edge cases and error conditions to test

- Timing requirements and protocol compliance checks

- Test prioritization based on risk and complexity

- Code examples for key test scenarios

This shortens the ramp-up time before you start verification, although the protocol specification remains the final authority for compliance checks.
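Prioritizing “based on risk and complexity” can be made concrete with a simple scoring scheme. The sketch below uses one common approach, not a standard: risk is likelihood times impact, and among equally risky items the cheaper test goes first. The plan items name real USB 3.0 areas (LTSSM link training and recovery, U1/U2 link power states, 1024-byte SuperSpeed bulk packets), but every score is a placeholder your team would estimate. `PlanItem` and `prioritize` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class PlanItem:
    name: str
    likelihood: int  # 1-5: how likely a bug is here (team's estimate)
    impact: int      # 1-5: how bad an escaped bug would be
    effort: int      # 1-5: relative complexity of building the test

    @property
    def risk(self) -> int:
        return self.likelihood * self.impact


def prioritize(items):
    # Highest risk first; among equal risk, cheaper tests first.
    return sorted(items, key=lambda it: (-it.risk, it.effort))


# Placeholder scores for illustration only.
plan = [
    PlanItem("Bulk transfers at 1024-byte max packet size", 3, 4, 2),
    PlanItem("LTSSM recovery after link errors", 4, 5, 4),
    PlanItem("U1/U2 low-power entry and exit", 4, 4, 3),
    PlanItem("CRC error injection on data packets", 3, 4, 1),
]
for item in prioritize(plan):
    print(f"risk={item.risk:2d}  effort={item.effort}  {item.name}")
```

Keeping the scores explicit makes an LLM-suggested ordering easy to review and adjust.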

Tools You Can Use Today

The good news is that you don’t need expensive, specialized software to start using LLMs for verification. Here are some readily available options:

Free Options:

- ChatGPT (Free tier): Excellent for code generation, debugging help, and learning SystemVerilog/UVM concepts

- Claude (Free tier): Great for analyzing large code bases and complex debugging scenarios

- GitHub Copilot: Integrated directly into VS Code and other editors, provides real-time code suggestions for verification code

- Perplexity AI: Useful for researching protocols and standards with up-to-date information

Paid Options (More Powerful):

- ChatGPT Plus: Faster responses and access to more capable GPT models, better for complex verification tasks

- Claude Pro: Enhanced capabilities for analyzing large files and complex scenarios

- GitHub Copilot Pro: Advanced code completion and chat features integrated into your development environment

Getting Started Example: Try this with ChatGPT or Claude: “Create a simple SystemVerilog testbench for a 4-bit counter with enable and reset. Include assertions to check counting behavior.”

You’ll typically get code with explanations that you can simulate, study, and adapt after reviewing it.
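Before running a generated counter testbench, it helps to know exactly what its assertions should check. The Python sketch below states three counting properties over a recorded trace and checks them against a reference model. Reset clears the count. Enable increments it, wrapping from 15 to 0. The count holds when enable is low. The sketch assumes a synchronous, active-high reset that takes priority over enable, and a count of 0 before the first cycle. `Counter4` and `check_trace` are hypothetical names. In SystemVerilog, the same properties would typically be written as concurrent assertions using `$past`.

```python
class Counter4:
    """Reference model: 4-bit counter with synchronous reset (priority) and enable."""

    def __init__(self):
        self.count = 0

    def clock(self, reset: bool, enable: bool) -> int:
        if reset:
            self.count = 0
        elif enable:
            self.count = (self.count + 1) & 0xF  # wraps from 15 back to 0
        return self.count


def check_trace(stimulus, observed):
    """Check counting properties on a trace.

    stimulus: list of (reset, enable) per clock edge
    observed: count value seen after each edge
    Returns a list of violations; an empty list means every check passed.
    """
    errors = []
    prev = 0  # assumed value before the first edge
    for cycle, ((reset, enable), cur) in enumerate(zip(stimulus, observed)):
        if reset:
            if cur != 0:
                errors.append(f"cycle {cycle}: reset did not clear the count")
        elif enable:
            if cur != (prev + 1) % 16:
                errors.append(f"cycle {cycle}: expected {(prev + 1) % 16}, got {cur}")
        elif cur != prev:
            errors.append(f"cycle {cycle}: count changed while disabled")
        prev = cur
    return errors


# One reset, 20 enabled cycles (exercising the 15 -> 0 wrap), then 3 idle cycles.
stimulus = [(True, False)] + [(False, True)] * 20 + [(False, False)] * 3
model = Counter4()
trace = [model.clock(r, e) for r, e in stimulus]
print(check_trace(stimulus, trace))  # prints [] for the reference model
```

The checker only looks at stimulus and observed values. That means it can be pointed at counts captured from simulation, for example to catch a counter that saturates at 15 instead of wrapping.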

Getting Started: Practical Tips

Start Simple: Begin with basic code generation and test creation before tackling complex debug scenarios.

Provide Context: The more details you give about your design and requirements, the better the results. Share interface specifications, timing requirements, and any special considerations.

Iterate and Refine: Use the LLM’s output as a starting point, then refine your requests based on the initial results.

Always Verify: LLM-generated code should be reviewed and tested. These tools are powerful assistants, not replacements for engineering judgment.

Learn as You Go: Pay attention to the explanations and techniques the LLM uses—you’ll quickly pick up verification best practices.

What to Expect and What to Watch For

LLMs are powerful, but they have limitations. They can generate code that compiles yet contains subtle errors, reference signals or methods that don’t exist, or miss domain-specific nuances. Always test and validate their output, especially for critical verification tasks.
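One practical way to test and validate a generated helper is differential testing. You run it and a trusted reference on the same corner-case and random inputs, then look for any disagreement. The sketch below applies this to a Gray-to-binary converter, a helper that commonly appears in asynchronous FIFO designs. The “candidate” function is a hypothetical example of plausible-looking generated code with a subtle bug. It is correct for 2-bit values, so a narrow test would pass it, but it fails at wider widths. All function names are illustrative.

```python
import random


def bin_to_gray(b: int) -> int:
    return b ^ (b >> 1)


def gray_to_bin_reference(g: int, width: int) -> int:
    """Trusted reference: binary bit i = Gray bit i XOR binary bit i+1."""
    b = 0
    for i in range(width - 1, -1, -1):
        upper = (b >> (i + 1)) & 1
        b |= (((g >> i) & 1) ^ upper) << i
    return b


def gray_to_bin_candidate(g: int, width: int) -> int:
    # Hypothetical generated code: looks plausible, but XORs in only one
    # shifted copy, which is correct for 2-bit values and wrong above that.
    return g ^ (g >> 1)


def differential_test(candidate, reference, width, n_random=1000, seed=0):
    """Return the first input where candidate and reference disagree, else None."""
    rng = random.Random(seed)
    top = (1 << width) - 1
    corners = [0, 1, top, top >> 1, 1 << (width - 1)]
    randoms = [rng.randint(0, top) for _ in range(n_random)]
    for g in corners + randoms:
        if candidate(g, width) != reference(g, width):
            return g
    return None


print(differential_test(gray_to_bin_candidate, gray_to_bin_reference, width=2))  # None
print(differential_test(gray_to_bin_candidate, gray_to_bin_reference, width=8))  # 255
```

Checking corner values first, including all ones and the top bit alone, tends to expose width-dependent bugs quickly. Random values cover the rest.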

However, the benefits far outweigh the challenges. LLMs democratize verification expertise, making advanced techniques accessible to beginners while dramatically increasing productivity for experienced engineers.

The Bottom Line

Large Language Models are transforming hardware verification from an expert-only field into something more accessible and efficient. Whether you’re generating tests, debugging failures, analyzing coverage, or learning new concepts, LLMs provide intelligent assistance that can accelerate your verification projects and improve their quality.

The key is starting with simple applications and gradually expanding your use as you become more comfortable with the technology. With LLMs as your verification partner, you can tackle complex verification challenges with confidence, even as a beginner.

The future of verification is more intelligent, more accessible, and more efficient—and that future is available today through LLM-enhanced verification workflows.